Caching in Python [3AE]

While the Spark in Go is in progress, I recently stumbled on a good use-case which made me think,

“Is it finally the day when I’ll use something I read in an OS class?”,

and the answer is:

There’s plenty of documentation around caching, so I won’t beat around the bush on this one for too long. Just regular memes and some knowledge in between.

Let’s imagine a use-case, and since this is not a system design round, let’s keep it simple.

The Setup

Imagine there’s an expensive service (not Netflix) that the rest of our engineering solution talks to, and which is responsible for returning some value.

Let’s take a news-reader app (strictly text), where the user keeps going back and forth between different titles (because let’s be honest, who reads more than 10 words of an article?). So the UI on the home page needs to fetch every article the user clicks on.

There are 2 ways this can work:

- Talk to the micro-service every time the user clicks a title, and fetch the same article again and again.

- Check some magic local space to see if the article was fetched before. If it was, forget solution 1 exists and move ahead with this.

This magical local space is the “Cache”, and the person who uses it is called:

Don’t believe me? Ask the A of MAANG what cache is, and they’d say:

Caching helps applications perform dramatically faster and cost significantly less at scale.

The Build

Let’s talk implementation first and then maybe we can discuss benefits.

Some premises first:

talk_to_me() is a function that receives the request for an article_id

ill_talk_to_them() is a function that fetches the article data for an article_id

some_time_consuming_service() is a function that connects to an external service to fetch article data

time_this() is a decorator that measures the time taken

Rest, we’ll see as we go.

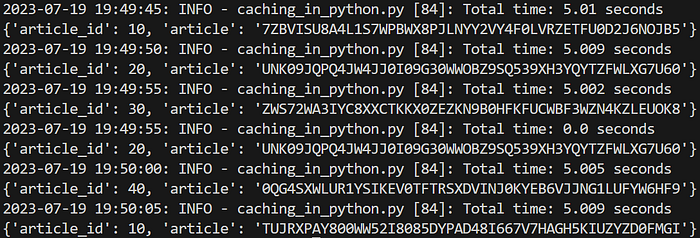

If you run this, you’ll see something like:

which makes sense, because the value is already present in our own cache, so why bother fetching it from an expensive method?

Caching Strategies

So far, what you’ve seen is just a slice of caching, and how straightforward it is to implement. But there are several flavors to it. Since caching is such an effective tool, we don’t want to overburden it by adding literally everything (maybe a pirated copy of Avengers: Endgame too) to the cache and letting it grow until it slows down the whole system.

Instead, we can implement an eviction strategy that cleans up the cache every now and then, based on a principle like frequency or recency of use.

Going through all of the strategies is beyond the scope of this article, but I’d highly recommend reading more on this here. To name a few important ones:

1. Least Recently Used

2. Least Frequently Used

3. Time To Live

4. First In First Out

And many others…
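The following is a minimal sketch of one eviction strategy, Least Recently Used, built on collections.OrderedDict. It is meant to make the idea concrete. The LRUCache class and its get/put methods are my own illustration and are not a library API.

```python
from collections import OrderedDict


class LRUCache:
    """A tiny least-recently-used cache (illustrative, not a library class)."""

    def __init__(self, maxsize):
        self.maxsize = maxsize
        self._data = OrderedDict()  # front = least recently used

    def get(self, key, default=None):
        if key not in self._data:
            return default
        # A hit makes this key the most recently used one.
        self._data.move_to_end(key)
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        # Over capacity: evict from the front, the least recently used key.
        if len(self._data) > self.maxsize:
            self._data.popitem(last=False)

    def keys(self):
        return list(self._data)
```

Suppose maxsize=2. You put "a" and "b", read "a", and then put "c". The cache evicts "b", because reading "a" made it more recent. If you remove both move_to_end calls, the same class behaves as a First In First Out cache.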

Caching In Python

Python’s built-in functools module provides cache, cached_property and lru_cache, which can be used as-is to implement caching more efficiently. Take lru_cache with maxsize=3: once three results are cached, adding a new one forces the least recently used item out of the cache.

Running such an example gives output along these lines:

Calling talk_to_me() for 10, 20, 30 takes 5 seconds each.

After that, since the results are cached, calling it again for 20 responds without wasting any time. But that’s not the end. As soon as we add one more item to the cache, it evicts 10, the least recently used entry.

And so, when we finally call it again for 10, it takes 5 seconds again.
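This sketch reproduces the lru_cache scenario above. FETCH_DELAY_SECONDS is a hypothetical stand-in for the slow service, and timed_call is a hypothetical helper for printing timings. lru_cache, cache_clear and cache_info are real functools APIs.

```python
import time
from functools import lru_cache

# Hypothetical delay standing in for the expensive service (~5 s in the post).
FETCH_DELAY_SECONDS = 5


def some_time_consuming_service(article_id):
    """Pretends to call the slow external service."""
    time.sleep(FETCH_DELAY_SECONDS)
    return f"Article body for {article_id}"


@lru_cache(maxsize=3)
def talk_to_me(article_id):
    # Only runs on a cache miss; hits are answered by lru_cache itself.
    return some_time_consuming_service(article_id)


def timed_call(article_id):
    """Hypothetical helper: calls talk_to_me and prints how long it took."""
    start = time.perf_counter()
    talk_to_me(article_id)
    print(f"talk_to_me({article_id}) took {time.perf_counter() - start:.2f}s")
```

Call timed_call for 10, 20, 30, 20, 40 and then 10. Every call should take about 5 seconds except the repeated 20. Afterwards, talk_to_me.cache_info() reports hits=1, misses=5 and currsize=3.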

Beyond functools, an interesting third-party package with some more advanced strategies implemented is cachetools. This brings me to the final section of the article: the use-case that inspired me to write it in the first place.

The Payoff

Read the following prologue first:

“Micro-service 01 takes a JSON payload containing a list of values (v1, v2, v3…vn). Each value in this list is a separate entity which, combined with an encryption key, can be used to fetch secrets from Micro-service 02. The values in the payload can be quite repetitive. Also, the data fetched from Micro-service 02 goes stale after x seconds, so it needs to be refreshed every now and then.

How can all this be implemented so that the secrets for already-fetched values can be accessed quickly, and the cache can also expire entries after some time so that fresh values are obtained?”

To the rescue comes TTLCache from cachetools.

TTLCache takes arguments like:

1. maxsize (number of items cached at a time)

2. ttl (how long each item stays in the cache before it expires)
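The following standard-library stand-in shows what those two arguments mean without installing anything. SimpleTTLCache is a hypothetical class and is not part of cachetools. It also simplifies one detail: when full, it evicts the oldest insertion, whereas cachetools’ TTLCache evicts the least recently used entry. The injectable timer mirrors the timer argument that TTLCache also accepts, and it makes expiry easy to test.

```python
import time
from collections import OrderedDict


class SimpleTTLCache:
    """Hypothetical stdlib stand-in mimicking TTLCache's maxsize/ttl idea."""

    def __init__(self, maxsize, ttl, timer=time.monotonic):
        self.maxsize = maxsize
        self.ttl = ttl
        self.timer = timer
        self._data = OrderedDict()  # key -> (value, expires_at), oldest first

    def _expire(self):
        # Drop every entry whose expiry time has been reached.
        now = self.timer()
        for key in [k for k, (_, exp) in self._data.items() if exp <= now]:
            del self._data[key]

    def __contains__(self, key):
        self._expire()
        return key in self._data

    def __getitem__(self, key):
        self._expire()
        return self._data[key][0]  # raises KeyError if missing or expired

    def __setitem__(self, key, value):
        self._expire()
        self._data.pop(key, None)
        self._data[key] = (value, self.timer() + self.ttl)
        if len(self._data) > self.maxsize:
            self._data.popitem(last=False)  # full: drop the oldest insertion

    def __len__(self):
        self._expire()
        return len(self._data)
```

With ttl=8, an item stored at time 0 is still readable at 7.9. It is gone at exactly 8, which matches cachetools’ rule that an entry is valid only while the timer is below its expiry time.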

True, it might not be applicable in every scenario, but here, boy, does it work like a charm. The following is a dummy implementation of the same:

LAST CODE BLOCK AHEAD, EXIT NEAR THE GATE

Some important methods here:

get_secrets_for_key() is the method that uses TTLCache (entries expire after 8 seconds, and it holds at most 10 items).

get_secrets_from_expensive_service() imitates an external dependency that takes time to fetch any value.

this_runs_every_now_and_then(), well, runs every now and then, fetching the latest set of values whenever the cache has expired.

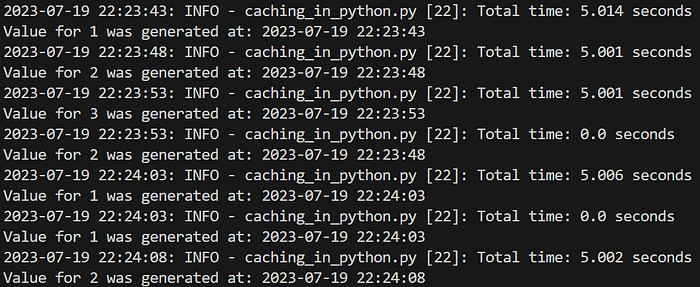

Running the above code will give the following as output:

With an expiry of 8 seconds, you can see that every time a key is accessed within that window, the time taken to fetch it is 0 seconds, since it’s already present in the cache.

When this isn’t the case, the expensive method is called, which eats 5 seconds for a new key. And finally, once 8 seconds have passed, it fetches fresh values for every key.

And there you have it: a beginner-friendly guide to caching techniques, so you can brag to your colleagues about how you used something you studied in your OS class.

I’m suddenly watching a lot of crime thrillers these days. Honorable mentions include: Love and Death, The Killing, The Night of…

Mission Impossible 7 might be one of the best movies of the year.

I recently finished reading Stealing Fire by Steven Kotler, and now I’m thinking that I should buy it as well.

And weirdly enough, I’m watching streams of Arpit Bala back to back for reasons I myself am not aware of at the moment.

With that being said/sad, see you in the next one, assuming you actually made it to the end.

:)